Jordan Wirfs-Brock

Research

This page includes some of the projects I’ve worked on as a PhD student in Information Science at the University of Colorado. My methods include qualitative interviews, speculative design, data visualization, computational social science, and participatory design.

Transmodal News Behaviors | Conversational Sonification | Recipes For Breaking Data Free | Quantuition

Transmodal News Behaviors: Studying How People Interact with News Across Technologies

The changing media technology landscape has thrown the news industry into upheaval. Simultaneously, the ubiquity of mobile devices, abundance of digital news outlets, and growing role of social media have disrupted how people find news content. Faced with media convergence and diffuse news content, people are now cast as the (sometimes accidental) designers and curators of their own personal news and information ecosystems.

In this project, completed during an internship on Yahoo’s User Experience Research team with Katie Quehl (summer 2018), we conducted a two-week diary study with 14 people across the U.S. to understand how they interact with news content across different media types, sources, platforms and devices.

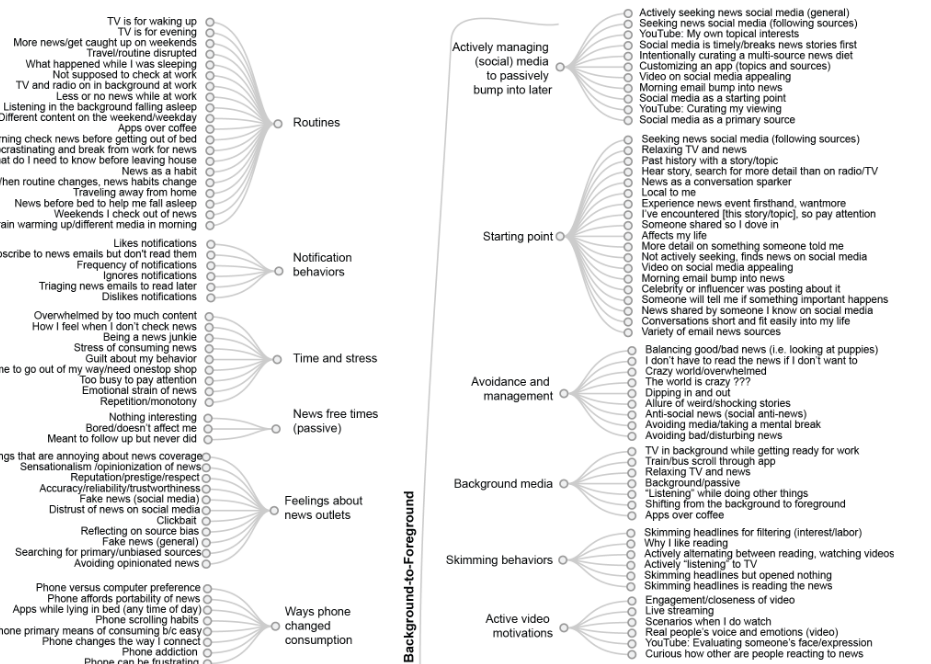

Our study revealed that news is a background-to-foreground behavior, where inciting factors such as a personal history with a news topic can inspire people to switch from passive to active news behaviors. We also observed the importance of background news in managing the social and emotional labor of keeping up with the news, the role of news in maintaining social relationships, and the potential to tap into self-tracking behavior as a way to encourage healthy news consumption. These contributions can help designers create news platforms that empower users to take control of their own news habits and navigate the overwhelming news and information ecosystem.

Finally, we make the case for how and why a transmodal approach, which attends specifically to the transitions between technology-mediated experiences, can be applied to understand motivations and behaviors.

Journal article: Jordan Wirfs-Brock and Katie Quehl. 2019. News From the Background to the Foreground: How People Use Technology To Manage Media Transitions: A Study of Technology-mediated News Behaviors in a Hyper-connected World. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 3, 3, Article 110 (September 2019), 19 pages. DOI: https://doi.org/10.1145/3351268 | PDF | View in ACM digital library

Presentation slides (talk given at UbiComp 2019)

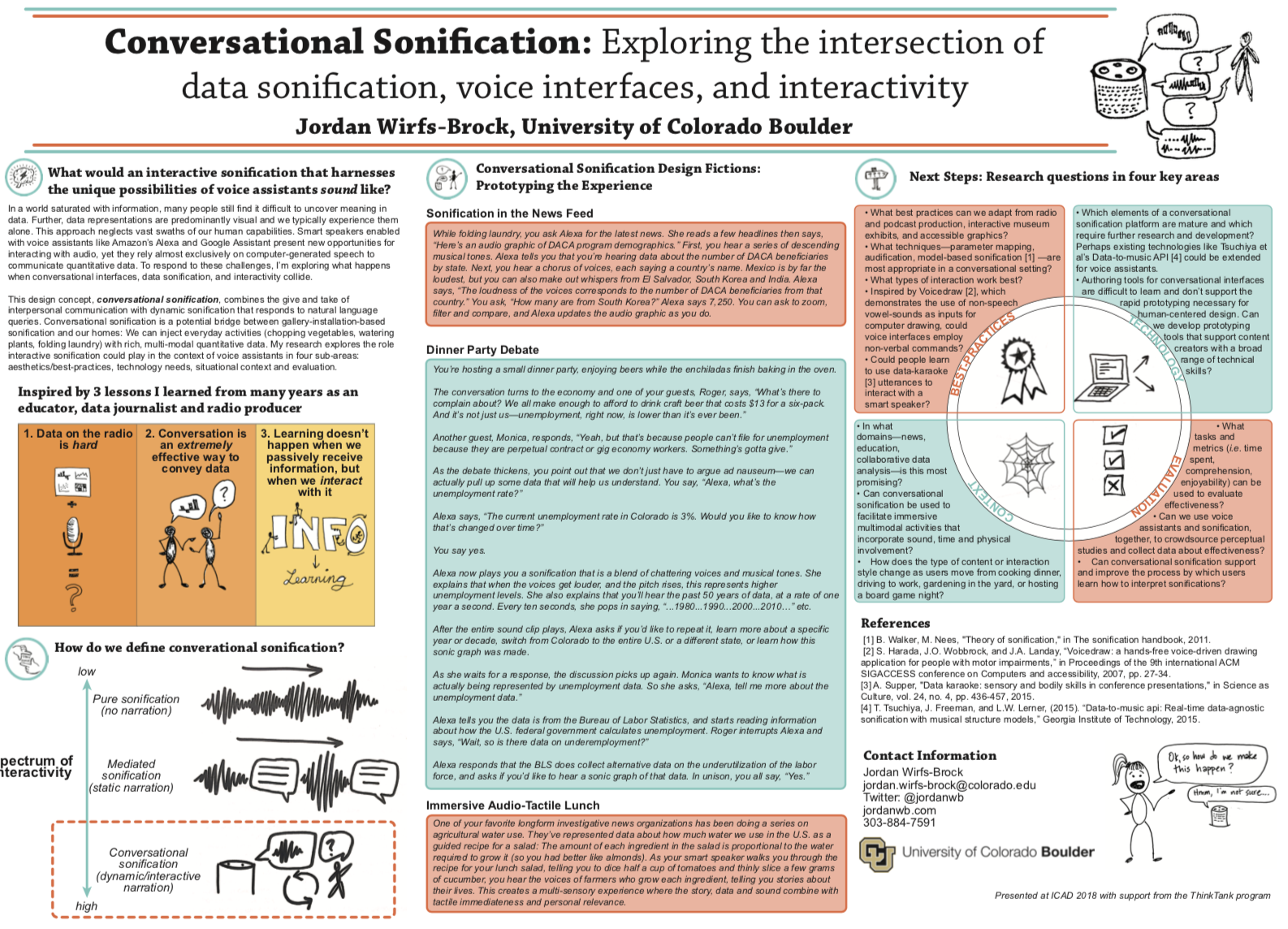

Conversational Sonification

Smart speakers and voice assistants present new opportunities for interacting with audio, yet they rely almost exclusively on computer-generated speech to convey information. At the same time, data sonification — like visualization, but for the ears — hasn’t appeared in the right environments for people to learn how to listen to data. To respond to these challenges, I’m exploring what happens when voice user interfaces, data sonification, and interactivity collide. What would an interactive data story that people can engage with via a voice assistant sound like? Imagine:

While folding laundry, you ask your voice assistant for the latest news. She reads a few headlines, then says, “We’ve also got an audio graphic of the demographics of people in the DACA program. Would you like to interact with it?” You say yes. First, you hear descending musical tones. Your voice assistant says you’re hearing a representation of the number of DACA beneficiaries, by state. Next, you hear a chorus of voices, each saying a country’s name. Mexico is the loudest, but you can make out whispers from El Salvador, South Korea and India. Your voice assistant says, “The loudness of the voices corresponds to the number of DACA beneficiaries from that country.” You ask, “How many are from South Korea?” Your voice assistant answers: 7,250. You can ask to zoom, filter and compare, and your voice assistant updates the audio graphic as you do.

This design concept, conversational sonification, combines the give-and-take of interpersonal communication with dynamic data sonification that responds to natural language queries. The widespread adoption of smart speakers and the rapid expansion of voice assistant developer tools open up new, unexplored territory for audio interfaces, yet this medium is still searching for its killer app. Smart speakers have unique affordances: when we speak, we enter a conversational mode of thinking and learning, and users aren’t tethered to a screen but interact with content while embedded in their homes, workplaces and environments. My research uses a human-centered computing approach to explore how emerging voice ecosystems can offer new ways for people to interact with each other and with information.
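The DACA scenario describes two data-to-sound mappings: values heard as pitch (a descending sequence of tones) and values heard as the loudness of each spoken country name. As a minimal sketch of what such mappings could look like, assuming a decibel scale for loudness and a logarithmic (musical) scale for pitch, the Python below converts counts into gains and frequencies. The function names `loudness_gains` and `descending_pitches`, the -40 dB floor, and the 220 to 880 Hz pitch range are hypothetical choices for illustration, not parameters from this project, and the sample values are placeholders rather than real DACA figures.

```python
import math


def loudness_gains(counts, floor_db=-40.0):
    """Map each category's count to a playback gain in decibels (hypothetical mapping).

    The largest count plays at 0 dB (full volume). Smaller counts are attenuated
    by 20 * log10(count / largest), then clamped at floor_db so that small
    categories remain audible as "whispers" instead of disappearing.
    """
    peak = max(counts.values())
    gains = {}
    for name, n in counts.items():
        if n <= 0:
            gains[name] = floor_db  # nothing to scale: park it at the floor
        else:
            gains[name] = max(floor_db, 20.0 * math.log10(n / peak))
    return gains


def descending_pitches(values, low_hz=220.0, high_hz=880.0):
    """Map values to frequencies, largest value -> highest pitch (hypothetical mapping).

    Values are sorted from largest to smallest, so playing the result in order
    yields a descending sequence of tones. Each value's position between the
    minimum and maximum is interpolated on a logarithmic frequency scale, so
    equal steps in the data sound like equal musical intervals.
    """
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid dividing by zero when all values are equal
    tones = []
    for v in sorted(values, reverse=True):
        t = (v - lo) / span  # 0.0 for the smallest value, 1.0 for the largest
        tones.append(low_hz * (high_hz / low_hz) ** t)
    return tones


if __name__ == "__main__":
    # Placeholder values for illustration only; not real DACA figures.
    example = {"country_a": 1000, "country_b": 100, "country_c": 1}
    print(loudness_gains(example))
    print(descending_pitches(list(example.values())))
```

Decibels are used for loudness here because perceived loudness tracks amplitude roughly logarithmically. A working conversational sonification would still need to pass these values to an audio or speech-synthesis engine and recompute them as the listener zooms, filters and compares; this sketch leaves those parts out.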

This is an ongoing project that combines speculative design, user experience research, interaction design, and data sonification. Early presentations of this work include a lightning talk at Women Techmakers/GDG Boulder 2018 and a poster presented as part of the ThinkTank graduate student consortium at the International Conference on Auditory Display (ICAD) 2018.

Recipes For Breaking Data Free

Do you ever feel like your data doesn't belong to you? This design project explores how the pre-defined ways personal biometric data is served to us hinder — or enhance — our ability to find meaning in our data. Through a series of recipes for novel data interactions and performances of those recipes, it presents alternative modes for experiencing data that is about us, yet not solely controlled by us.

In this project, I created “slow” data sonifications.

These sonifications expose the hidden labor in representing and interpreting data and provide a stark contrast to the “fast” prefab data representations that typically come with personal tracking devices.

Visit the project website to listen to more sonifications and read reflections on the project.

Published as a Provocation in DIS 2019: Jordan Wirfs-Brock. 2019. Recipes for Breaking Data Free: Alternative Interactions for Experiencing Personal Data. In Companion Publication of the 2019 on Designing Interactive Systems Conference 2019 Companion (DIS '19 Companion). ACM, New York, NY, USA, 325-330. DOI: https://doi.org/10.1145/3301019.3323892 | PDF | View in ACM digital library

View the poster (high res PDF)

I originally created this project as coursework for Critical Technical Practice, Fall 2018, taught by Laura Devendorf.

Quantuition: Exploring the Future of Representing Biometric Data

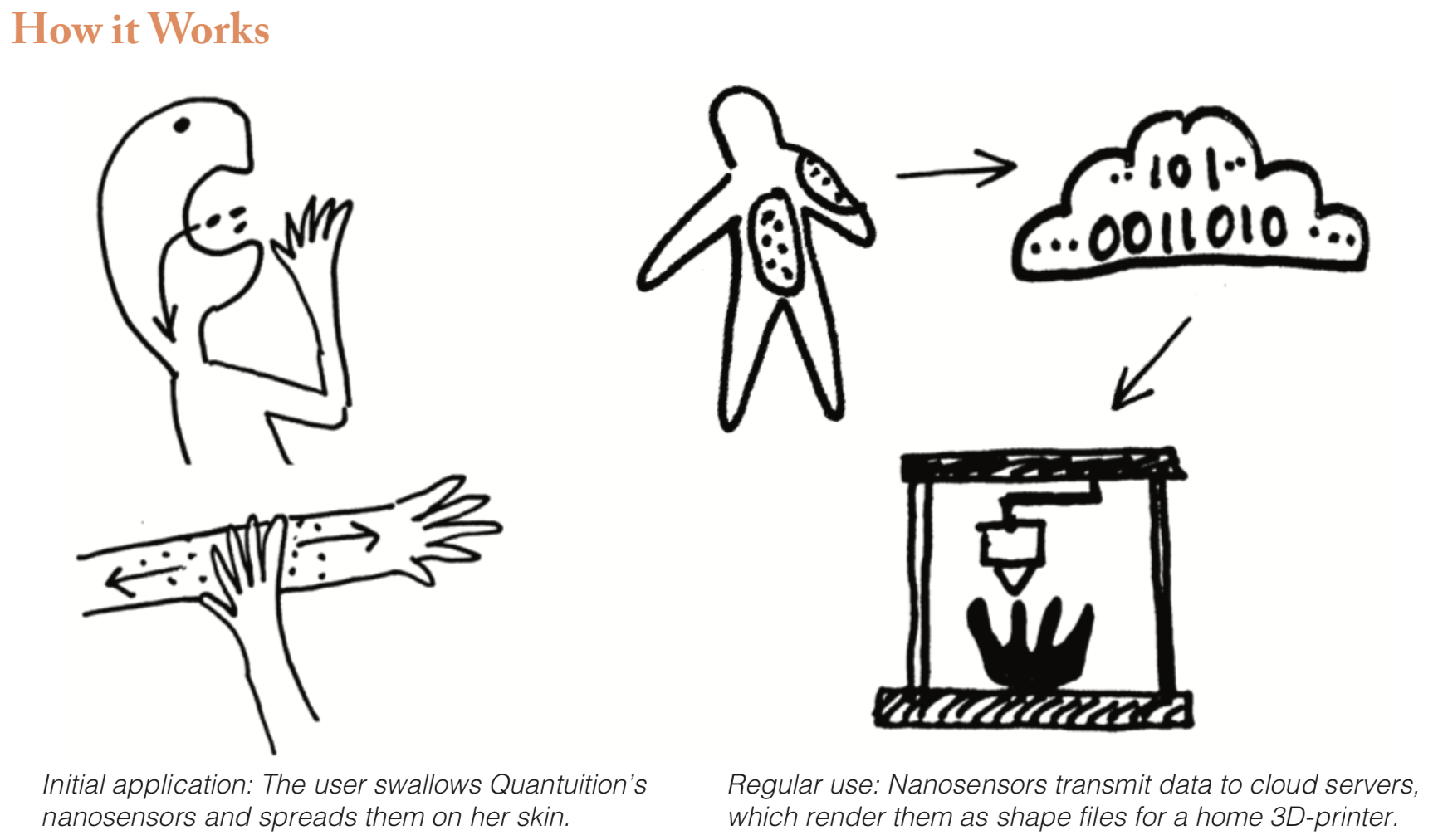

In a future where we track everything, how will data representations dictate how we relate to ourselves and the world? This project explores the relationship between personal biometric data and the meaning we find in it. I designed a speculative self-tracking system, Quantuition, that collects data from body-based nanosensors. The system renders that data into 3D data sculptures. Presented in the form of an Instagram feed, this speculation highlights how data design influences the process of individual and social sense-making. We often ascribe power and authority to data representations, while overlooking the hidden decisions embedded in those representations about what to measure, analyze, emphasize and display.

When self-tracking becomes pervasive, are we ruled by data or do we rule it? In the near future, personal sensors track everything: how fast our hair is growing, the amount of dust we inhale, how many tears we cry. As we become aware of these myriad personal data points, they could overwhelm us. How do we draw meaning from this data? How do our interpretations of this data influence our actions, and what are the implications of these new feedback loops?

This project produced several provocations for tangible data futures:

- What new interpersonal interactions does data physicalization uncover?

- What positive and negative feedback loops are present in a hyper-quantified future?

- How will emerging technology shape the relationship between data representations and actions?

- Where does the user’s control – and free will – begin and end?

I submitted this work to the TEI 2019 Student Design Competition, where it won the award for best concept/design.

Read the paper | View the poster (high res PDF)