Jordan Wirfs-Brock

Research

This page includes some of the research projects I’ve done as a PhD student in Information Science at the University of Colorado, ranging from speculative and critical design, to data visualization, to designing for accessibility.

Conversational Sonification | Recipes For Breaking Data Free

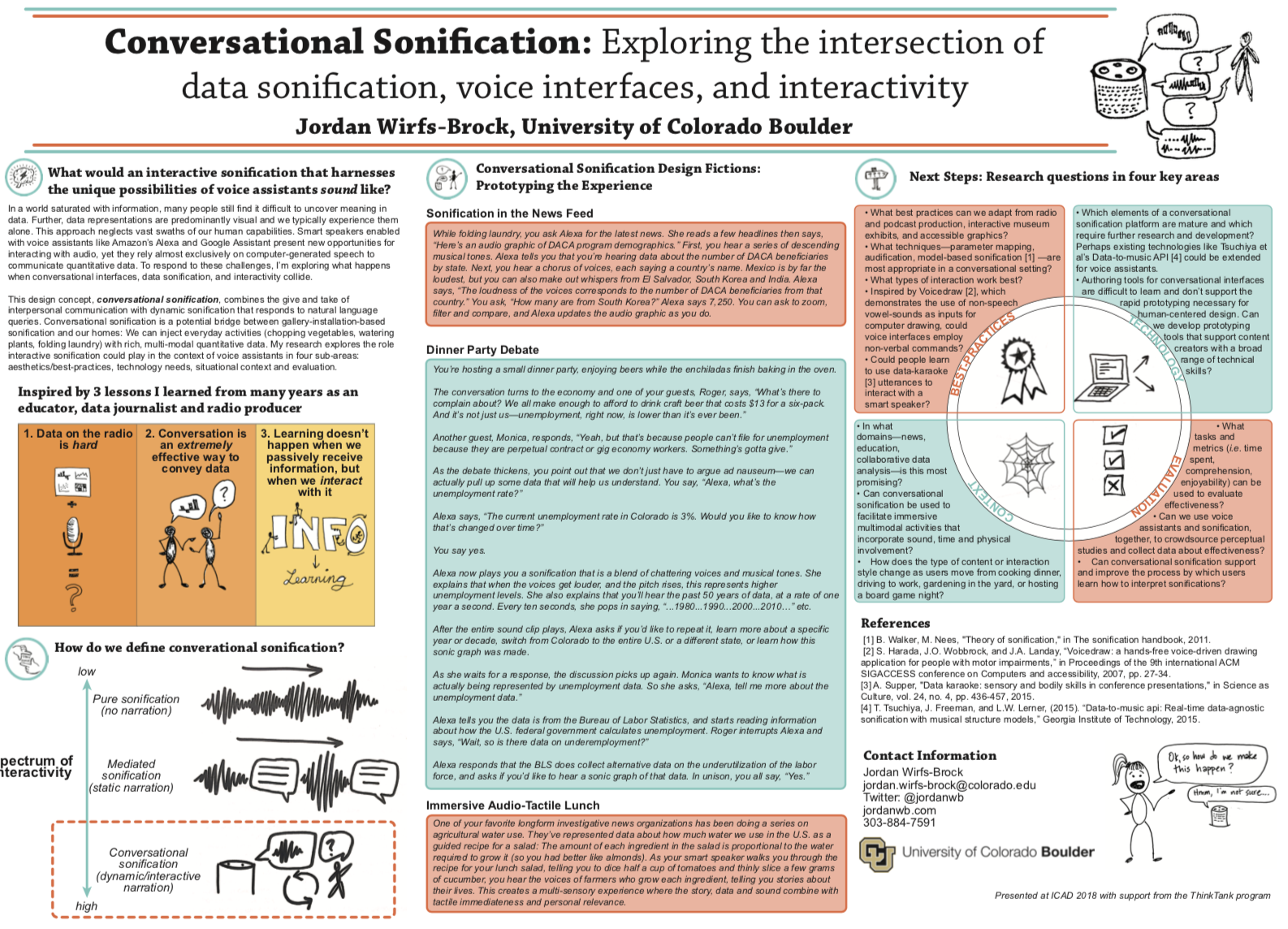

Conversational Sonification

Smart speakers and voice assistants present new opportunities for interacting with audio, yet they rely almost exclusively on computer-generated speech to convey information. At the same time, data sonification — like visualization, but for the ears — hasn’t appeared in the right environments for people to learn how to listen to data. To respond to these challenges, I’m exploring what happens when voice user interfaces, data sonification, and interactivity collide. What would an interactive data story that people can engage with via a voice assistant sound like? Imagine:

While folding laundry, you ask your voice assistant for the latest news. She reads a few headlines then says, “We’ve also got an audio graphic of the demographics of people in the DACA program. Would you like to interact with it?” You say yes. First, you hear descending musical tones. Your voice assistant says you’re hearing a representation of the number of DACA beneficiaries, by state. Next, you hear a chorus of voices, each saying a country’s name. Mexico is the loudest, but you can make out whispers from El Salvador, South Korea and India. Your voice assistant says, “The loudness of the voices corresponds to the number of DACA beneficiaries from that country.” You ask, “How many are from South Korea?” Your voice assistant tells you 7,250. You can ask to zoom, filter and compare; your voice assistant updates the audio graphic as you do.

This design concept, conversational sonification, combines the give and take of interpersonal communication with dynamic data sonification that responds to natural language queries. The widespread adoption of smart speakers and the rapid expansion of voice assistant developer tools open new, unexplored territory for audio interfaces. Yet this medium is still searching for its killer app. Smart speakers have unique affordances: when we speak, we enter a conversational mode of thinking and learning, and users aren’t tethered to a screen but interact with content while embedded in their homes, workplaces and environments. My research uses a human-centered computing approach to explore how emerging voice ecosystems can offer new ways for people to interact with each other and with information.
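As a rough illustration of the loudness mapping described in the scenario above, here is a minimal Python sketch of how counts might be turned into playback gains. It is not the project's implementation: the function name `loudness_gains`, the `floor_db` parameter, and the placeholder counts are all assumptions made for this example, and the numbers are not real DACA figures.

```python
def loudness_gains(counts, floor_db=-30.0):
    """Map each count to a linear playback gain in (0, 1].

    The largest count gets gain 1.0. Other gains are proportional to
    their count, but never drop below the floor, so that small
    categories remain audible as "whispers".
    (Hypothetical helper; names and defaults are illustrative.)
    """
    if not counts:
        return {}
    peak = max(counts.values())
    if peak <= 0:
        raise ValueError("at least one count must be positive")

    # Convert the decibel floor to a linear amplitude factor.
    floor_gain = 10 ** (floor_db / 20)

    gains = {}
    for label, value in counts.items():
        ratio = max(value, 0) / peak  # proportional amplitude
        gains[label] = max(ratio, floor_gain)
    return gains


# Placeholder counts, chosen only to show the shape of the mapping.
example = {"Country A": 1000, "Country B": 100, "Country C": 0}
for label, gain in loudness_gains(example).items():
    print(f"{label}: gain {gain:.3f}")
```

A linear mapping like this is only one option. Because perceived loudness is closer to logarithmic than linear, a real design would likely need listening tests to decide how counts should scale, and a follow-up query such as "How many are from South Korea?" would still return the exact number as speech.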

This ongoing project combines speculative design, user experience research, interaction design, and data sonification. Below, you can see early presentations of this work as a lightning talk at Women Techmakers/GDG Boulder 2018 and a poster presented as part of the ThinkTank graduate student consortium at the International Conference on Auditory Display (ICAD) 2018:

Recipes For Breaking Data Free

Do you ever feel like your data doesn't belong to you? This design project explores how the pre-defined ways personal biometric data is served to us hinder — or enhance — our ability to find meaning in our data. Through a series of recipes for novel data interactions and performances of those recipes, it presents alternative modes for experiencing data that is about us, yet not solely controlled by us.

In this project, I created “slow” data sonifications, like this one:

These sonifications expose the hidden labor in representing and interpreting data and provide a stark contrast to the “fast” prefab data representations that typically come with personal tracking devices.

Visit the project website to listen to more sonifications and read the project report.

I created this project as coursework for Critical Technical Practice, Fall 2018, taught by Laura Devendorf.